The AI Agent Trust Gap: Why Your Brain Says No

The AI agent wars are officially here. Amazon expanded Rufus. OpenAI embedded checkout into ChatGPT. Perplexity launched an AI shopping browser (and promptly got sued by Amazon for it). Hotels are deploying humanoid robots. Programmatic ad platforms are going "agentic."

Everyone's building agents. Almost nobody's asking the harder question: do consumers actually want them?

The Availability Heuristic Gets Inverted

Here's something interesting from behavioral economics. We've all heard of the availability heuristic: the tendency to judge probability by how easily examples come to mind. BehavioralEconomics.com recently introduced an inversion of it that they call "UnAvailability Bias."

The idea is simple but profound. In an age of information abundance, what we don't see (when we expect to see it) becomes evidence of impossibility. If something isn't immediately findable, we assume it doesn't exist.

This matters for AI agents because the trust gap isn't just about what agents can do. It's about what consumers expect to see and don't.

When a shopping agent recommends a product, where's the social proof? Where are the reviews, the "people like you bought this," the signals that activate our pattern-matching brains? The agent might have processed millions of data points. But if it can't show its work in a way that feels familiar, our brains treat that absence as a red flag.

The Shopping Agent Reality Check

Modern Retail's recent analysis of the AI shopping agent landscape reveals some sobering numbers. Shopping queries represent only about 2% of ChatGPT usage. That's roughly 50 million daily queries, which sounds impressive until you realize only one-third of consumers say they'd actually complete a purchase through an answer engine.
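As a rough sanity check, the 2% share and the 50 million daily queries together imply a total volume of about 2.5 billion queries a day. The short Python sketch below works that out. The variable names are illustrative, and the total is an inference from the two figures above, not a number reported by Modern Retail.

```python
# Figures quoted above (from Modern Retail's analysis).
shopping_share_percent = 2             # shopping queries as a share of ChatGPT usage
shopping_queries_per_day = 50_000_000  # "roughly 50 million daily queries"

# Implied total daily queries: an inference from the two figures, not a reported number.
implied_total_per_day = shopping_queries_per_day * 100 // shopping_share_percent

print(f"{implied_total_per_day:,}")
```

Note that the one-third figure describes consumers, not queries, so it can't simply be multiplied against these volumes.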

The obstacles aren't technical. They're psychological.

Amazon CEO Andy Jassy admitted that "most AI shopping agents fail to provide a satisfactory customer experience." These agents lack personalization. Pricing is often wrong. Delivery estimates miss the mark.

But here's the deeper problem: most merchants structure their product catalogs for keyword search, not the rich contextual information AI agents need. An agent needs to know about fit, quality, durability, and inventory levels. Merchants consider this proprietary. So the agent either guesses or gives generic answers.
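To make the gap concrete, here is a minimal sketch in Python of the difference between a keyword-oriented catalog entry and one carrying the context an agent would need. Every class, field name, and the sample product is hypothetical, invented for illustration only. It is not any merchant's or platform's actual schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class KeywordListing:
    """A catalog entry tuned for keyword search (hypothetical shape)."""
    title: str
    keywords: list[str]
    price: float


@dataclass
class AgentReadyListing(KeywordListing):
    """The same entry plus contextual fields (all hypothetical)."""
    fit_notes: Optional[str] = None
    quality_notes: Optional[str] = None
    durability_notes: Optional[str] = None
    units_in_stock: Optional[int] = None


def missing_context(listing: AgentReadyListing) -> list[str]:
    """Return the contextual fields the merchant has left empty."""
    context_fields = ("fit_notes", "quality_notes", "durability_notes", "units_in_stock")
    return [name for name in context_fields if getattr(listing, name) is None]


# Illustrative product: only the fit information has been shared.
boot = AgentReadyListing(
    title="Leather hiking boot",
    keywords=["boot", "hiking", "leather"],
    price=149.0,
    fit_notes="Runs half a size small",
)

print(missing_context(boot))
```

Each field that comes back empty is a place where the agent has to guess or fall back on a generic answer.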

And our brains notice. Immediately.

The Hotel Robot Problem

The hospitality industry offers a parallel example. Hotel Dive reports on the growing deployment of "humanoid, AI-powered agents" to handle operations. Concierge robots. Automated check-in. AI-powered room service recommendations.

The pitch is compelling: enhance guest satisfaction while addressing labor challenges. The reality is messier.

Hotels have something retail doesn't: captive attention. When you're standing in a lobby, you'll interact with whatever's there. But even in this favorable environment, the trust question remains. Does the robot actually understand my preferences, or is it following a script? Can it handle an unusual request, or will I end up frustrated and asking for a human anyway?

The technology can handle routine interactions. The question is whether routine interactions are where customer loyalty gets built.

The Agentic Advertising Play

Meanwhile, PubMatic just launched something called "AgenticOS" for programmatic advertising. The promise: cut down time spent on campaign setup and troubleshooting so teams can focus on strategy.

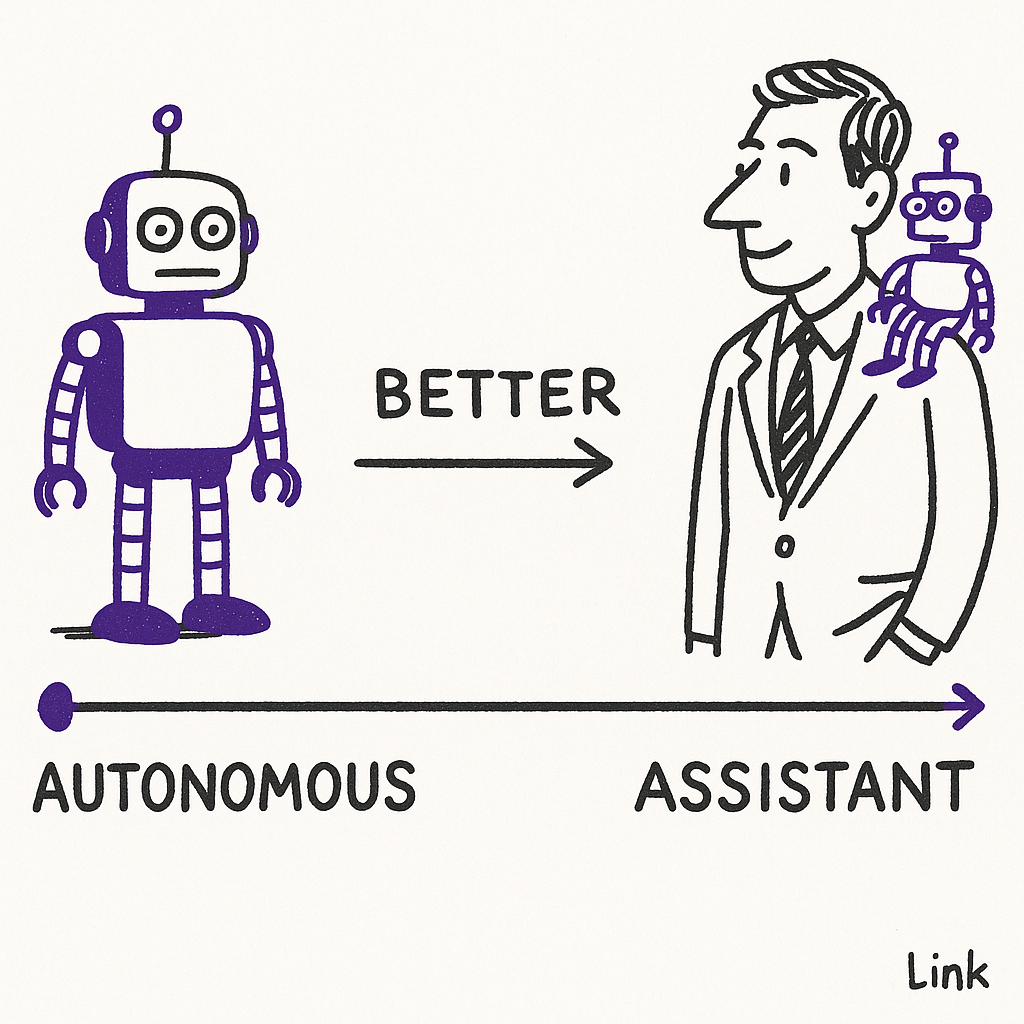

This is a telling reframe. Rather than promising AI agents that replace human judgment, PubMatic is positioning them as tools that handle grunt work. Let the agent manage the pipes. Let the humans decide what flows through them.

This might be the more realistic near-term path for enterprise AI agents. Not autonomous decision-makers, but sophisticated assistants that operate within guardrails humans set.

The same principle could apply to local brand partnerships. An AI agent that grades potential partners, surfaces opportunities, and handles initial research? Valuable. An AI agent that decides which partnerships to pursue and negotiates terms? Premature.

What This Means for Brands

If you're a brand evaluating how AI agents fit into your strategy, here's the uncomfortable truth: the technology is ahead of consumer readiness.

The availability heuristic tells us people judge by what they can easily recall, and UnAvailability Bias adds that they read a missing signal as a red flag. AI agents work in ways that are fundamentally opaque. They don't show their work in ways that trigger familiar trust signals.

The practical implication: anywhere trust matters (which is everywhere in consumer relationships), AI agents need to be wrapped in human context. The agent does the heavy lifting. The human interface provides the trust signals.

For local brand partnerships specifically, this means AI can absolutely help identify and evaluate opportunities. It can process more data than any human team. But the actual relationship, the thing that makes local partnerships valuable in the first place, still needs human faces and human judgment.

The companies that figure this out will use AI agents to scale research and efficiency while preserving the human touchpoints that actually build trust. The companies that don't will deploy impressive technology that consumers politely ignore.

Sources

- The Modern Peril of the Availability Heuristic - BehavioralEconomics.com

- Why the AI shopping agent wars will heat up in 2026 - Modern Retail

- PubMatic debuts agentic platform to address programmatic's AI headaches - Marketing Dive

- How humanoid, AI-powered agents are reshaping hotel operations - Hotel Dive

Hass Dhia is Chief Strategy Officer at Smart Technology Investments, where he helps brands find authentic local activation partnerships powered by neuroscience and AI. He holds an MS in Biomedical Sciences from Wayne State University School of Medicine, with thesis research in neuroscience.